It wouldn’t be a Microsoft Build without a bunch of new capabilities for Azure Cognitive Services, Microsoft’s cloud-based AI tools for developers.

The first new feature is what Microsoft calls the “personalized apprentice mode,” which lets the existing Personalizer API learn user preferences in real time, running in parallel with existing apps, without its decisions being exposed to users until it reaches preset performance goals.

With this update, the Cognitive Services Speech Service, the unified Azure API for speech-to-text, text-to-speech and translation, is coming to 27 new locales, and the company promises a 20 percent reduction in word error rates for its speech transcription services. For the Neural Text to Speech service, Microsoft says it has reduced the pronunciation error rate by 50 percent, and it is now bringing this service to 11 new locales with 15 new voices. It is also adding a pronunciation assessment to the service.

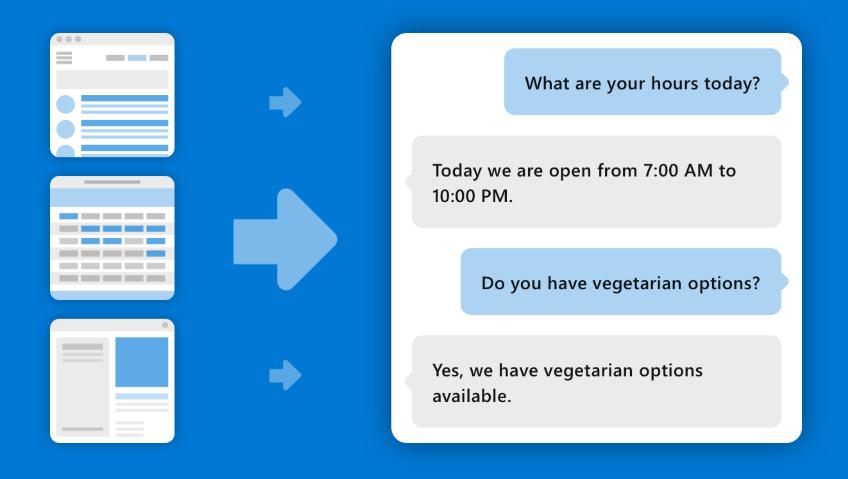

Also new is an addition to QnA Maker, a no-code service that can automatically read FAQs, support websites, product manuals and other documents and turn them into Q&A pairs. In the process, it creates something akin to a knowledge base, which users can now also collaboratively edit with the addition of role-based access control to the service.

In addition, Azure Cognitive Search, a related service that focuses on (you guessed it) search, is also getting new capabilities. Built on the same natural language understanding engine that powers Bing and Microsoft Office, Azure Cognitive Search now offers a custom search ranking feature (in preview) that lets users build their own search rankings based on their specific needs. As Microsoft notes, a home improvement retailer could use this to build its own search ranking system to augment the existing Cognitive Search results.

from Microsoft – TechCrunch https://techcrunch.com/2020/05/19/azure-cognitive-services-learns-more-languages/
