If these past few weeks felt like the sky was falling, you weren’t alone.

In the past month there were several major internet outages affecting millions of users across the world. Sites buckled, services broke, images wouldn’t load, direct messages ground to a halt and calendars and email were unavailable for hours at a time.

No single event is believed to have tied the outages together; it appears to have been terrible luck for all involved.

It started on June 2 — a quiet Sunday — when most weren’t working. A massive Google Cloud outage took out service for most on the U.S. east coast. Many third-party sites like Discord, Snap and Vimeo, as well as several of Google’s own services, like Gmail and Nest, were affected.

A routine but faulty configuration change was to blame. The change was meant to be isolated to a few systems, but a bug caused it to cascade throughout Google’s servers, causing gridlock across its entire cloud for more than three hours.

On June 24, Cloudflare dropped 15% of its global traffic during an hours-long outage because of a network route leak. The networking giant quickly blamed Verizon (TechCrunch’s parent company) for the fustercluck. Because of inherent flaws in the border gateway protocol, which governs how traffic is routed between networks on the internet, Verizon effectively routed an “entire freeway down a neighborhood street,” said Cloudflare in its post-mortem blog post. “This should never have happened because Verizon should never have forwarded those routes to the rest of the Internet.”

Amazon, Linode and other major companies reliant on Cloudflare’s infrastructure also ground to a halt.

A week later, on July 2, Cloudflare was hit by a second outage, this time caused by an internal code push that went badly. In a blog post, Cloudflare’s chief technology officer John Graham-Cumming blamed the half-hour outage on a rogue bit of “regex” code in its web firewall, designed to prevent its customer sites from getting hit by JavaScript-based attacks. But the regex code was bad and caused processor usage to spike across its machines worldwide, effectively crippling the entire service and any site reliant on it. The code rollback was swift, however, and the internet quickly returned to normal.
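The article does not give the offending pattern, so the following is only a generic illustration of one well-known way a regular expression can burn processor time: nested quantifiers that force the engine to backtrack. The patterns `RISKY` and `SAFER` and the helper names are hypothetical and are not Cloudflare's firewall rule.

```python
import re
import time

# Illustrative patterns only (assumptions, not taken from any real firewall rule).
RISKY = re.compile(r"(a+)+$")  # nested quantifiers: many ways to split a run of "a"
SAFER = re.compile(r"a+$")     # accepts the same inputs without the nesting


def time_match(pattern, text):
    """Return (match result, elapsed seconds) for one match attempt."""
    start = time.perf_counter()
    result = pattern.match(text)
    return result, time.perf_counter() - start


def compare(n):
    """Try both patterns on n copies of "a" followed by a "b" that forces failure."""
    text = "a" * n + "b"
    risky_result, risky_secs = time_match(RISKY, text)
    safer_result, safer_secs = time_match(SAFER, text)
    return risky_result, safer_result, risky_secs, safer_secs
```

Calling `compare(n)` for a few small values of `n` (say 14, 16 and 18) and printing the timings should show the risky pattern slowing down sharply as the input grows on a backtracking engine such as CPython's `re`, while the safer pattern stays fast. Neither pattern matches the test input; the difference is only in how much work it takes to find that out.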

Google, not wanting to be outdone by Cloudflare, was hit by another outage on July 2 thanks to physical damage to a fiber cable in its U.S. east coast region. The disruption lasted for about six hours, though Google says most of the disruption was mitigated by routing traffic through its other data centers.

Then, Facebook and its entire portfolio of services, including WhatsApp and Instagram, stumbled along for eight hours on July 3 as its shared content delivery network was hit by downtime. Facebook took to Twitter, no less, to confirm the outage. Images and videos across the services wouldn’t load, leaving behind only the creepy machine learning-generated descriptions of each photo.

Instagram was one of the many Facebook-owned services hit by an outage this week, with several users taking to Twitter to note the automatic tagging and categorization of images (Image: Derek Kinsman/Twitter)

At about the same time, Twitter too had to face the music, admitting in a tweet that direct messages were broken. Some complained of “ghost” messages that weren’t there. Some weren’t getting notified of new messages at all.

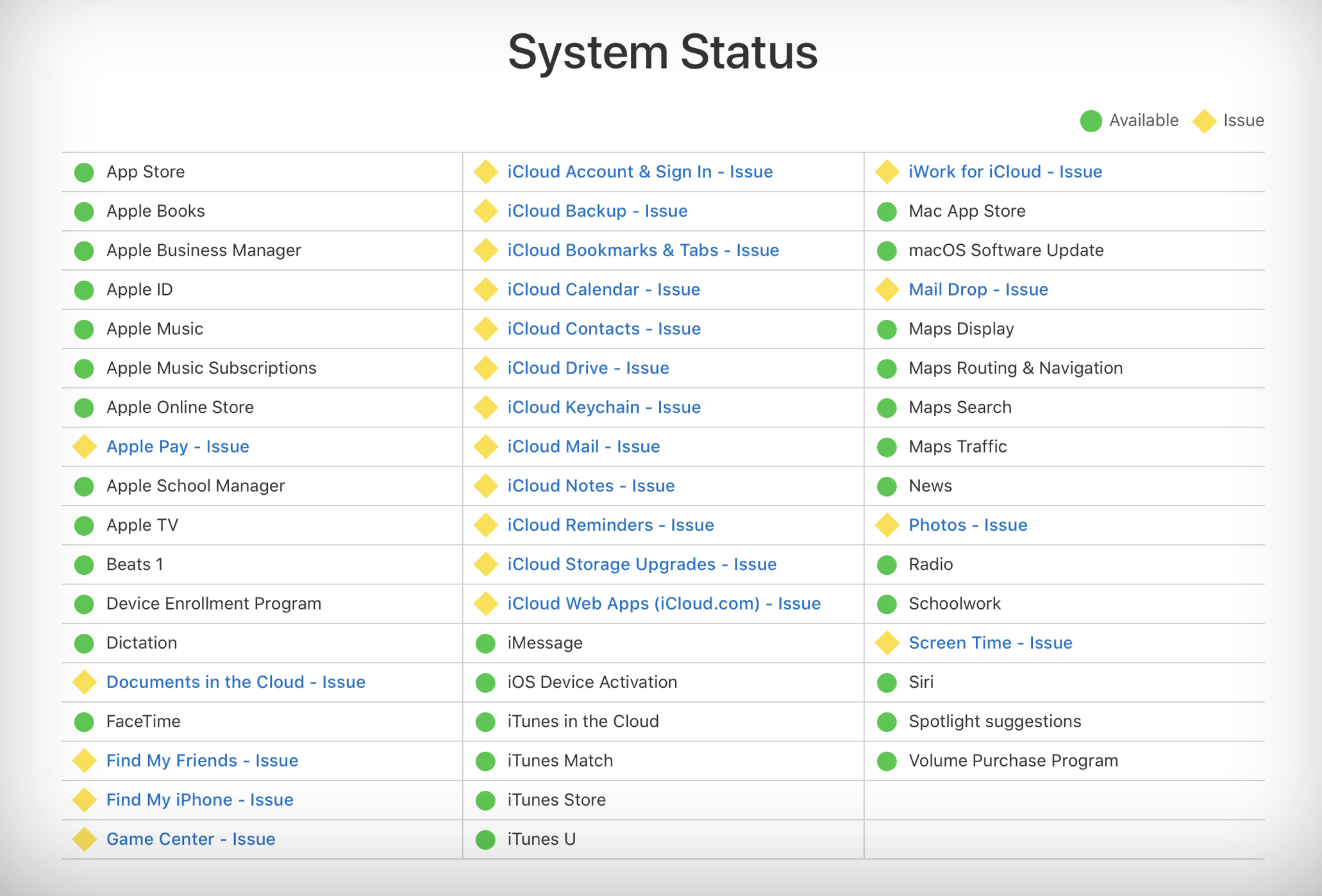

Then came Apple’s turn. On July 4, iCloud was hit by a three-hour nationwide outage, affecting almost every part of its cloud-based service, including the App Store, Apple ID, Apple Pay and Apple TV. In some cases, users couldn’t access their cloud-based email or photos.

According to internet monitoring firm ThousandEyes, the cause of the outage was yet another border gateway protocol issue — similar to Cloudflare’s scuffle with Verizon.

Apple’s nondescript outage page; it acknowledges issues, but not why or for how long (Image: TechCrunch)

It was a rough month for a lot of people. Points to Cloudflare and Google for explaining what happened and why. Less so to Apple, Facebook and Twitter, all of which barely acknowledged their issues.

What can we learn? First, internet providers need to do better with routing filters. Second, perhaps it’s not a good idea to run new code directly on a production system.
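To make the routing-filter point concrete, here is a minimal sketch of the idea: a network only accepts a route announcement from a neighbor if the prefix falls inside what that neighbor is registered to announce and is not overly specific. The registry `ALLOWED`, the neighbor name `customer-a`, the `MAX_PREFIX_LEN` cutoff and the function `accept_route` are all hypothetical, and real BGP filtering is configured on routers rather than written like this.

```python
import ipaddress

# Hypothetical registry of prefixes each neighbor may announce (documentation ranges).
ALLOWED = {
    "customer-a": [ipaddress.ip_network("203.0.113.0/24")],
}

# Assumption for this sketch: reject IPv4 routes more specific than a /24.
MAX_PREFIX_LEN = 24


def accept_route(neighbor: str, prefix: str) -> bool:
    """Accept a route only if it is within the neighbor's registered prefixes."""
    net = ipaddress.ip_network(prefix)
    if net.prefixlen > MAX_PREFIX_LEN:
        return False  # too specific to be accepted
    return any(
        net.version == allowed.version and net.subnet_of(allowed)
        for allowed in ALLOWED.get(neighbor, [])
    )
```

With a filter like this, `accept_route("customer-a", "198.51.100.0/24")` is rejected because that prefix is not registered to the neighbor, which is the kind of check that stops a leaked route from being forwarded on to the rest of the internet.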

These past few weeks have not looked good for the cloud, shaking the confidence of the many reliant on hosting giants like Amazon, Google and more. Although some quickly (and irresponsibly, and eventually wrongly) concluded the outages were because of hackers or threat actors launching distributed denial-of-service attacks, it’s always far safer to assume that an internal mistake is to blame.

But for the vast majority of consumers and businesses alike, the cloud is still far more resilient — and better equipped to handle user security — than most of those who run their own servers in-house.

The easy lesson is to not put all your eggs in one basket — or your data in a single cloud. But as this month showed, sometimes you can be just plain unlucky.

from Amazon – TechCrunch https://techcrunch.com/2019/07/05/bad-month-for-the-internet/
